Background

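This article analyzes the replay capability of the benchmarking tool fio and arrives at a way to use an iolog to make fio generate a user-specified load, for example different client IOPS at different points in time, or a load with pronounced data hot spots. This is useful for tasks such as constructing fault scenarios.

Building on how the iolog works, you can write an iolog generator for your own model to meet custom requirements.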

Understanding the replay iolog

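Two formats can be replayed:

1. The binary file produced by blkparse, which is the more widely supported one.
2. The plain-text iolog format, most commonly v2 and v3.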

Typical usage of the bin file

Capture

```bash
blktrace /dev/sdb1
blkparse sdb1 -d dd.bin >/dev/null
```

Replay I/O

```bash
fio --direct=1 --read_iolog="dd.bin" --replay_redirect=/dev/sdc1 --name=replay --replay_no_stall=1 --numjobs=1 --ioengine=libaio --iodepth=32

fio --read_iolog=../bb.bin --filename=fio-rand-read --name=a
```

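Using the iolog

The iolog generated with the rbd engine uses the v2 format.

The file header is the line fio version 2 iolog.

After that, each line declares one action for the job:

```text
filename action offset length
```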

action

wait

read

write

sync

datasync

trim

An example follows:

```text
fio version 2 iolog
rbd_13.0.0 add
rbd_13.0.0 open
rbd_13.0.0 write 893865984 4096
rbd_13.0.0 write 9905799168 4096
rbd_13.0.0 write 6045495296 4096
rbd_13.0.0 write 5778386944 4096
rbd_13.0.0 write 9706029056 4096
rbd_13.0.0 write 1973067776 4096
rbd_13.0.0 write 3528716288 4096
rbd_13.0.0 write 6849687552 4096
rbd_13.0.0 write 2277048320 4096
rbd_13.0.0 write 7225700352 4096
rbd_13.0.0 write 5898452992 4096
rbd_13.0.0 write 5612314624 4096
rbd_13.0.0 write 10423967744 4096
rbd_13.0.0 write 8727756800 4096
rbd_13.0.0 write 5164285952 4096
rbd_13.0.0 write 4583624704 4096
rbd_13.0.0 write 4850122752 4096
rbd_13.0.0 write 86384640 4096
rbd_13.0.0 write 6490755072 4096
rbd_13.0.0 write 7782293504 4096
rbd_13.0.0 write 122646528 4096
rbd_13.0.0 write 8404697088 4096
rbd_13.0.0 write 1540767744 4096
rbd_13.0.0 write 206385152 4096
rbd_13.0.0 write 9246814208 4096
rbd_13.0.0 write 2709151744 4096
rbd_13.0.0 write 7710785536 4096
rbd_13.0.0 write 2957721600 4096
rbd_13.0.0 write 7532285952 4096
rbd_13.0.0 write 52547584 4096
rbd_13.0.0 write 4910313472 4096
rbd_13.0.0 write 4400508928 4096
rbd_13.0.0 write 1650491392 4096
rbd_13.0.0 write 2253017088 4096
rbd_13.0.0 write 8878170112 4096
rbd_13.0.0 write 7537848320 4096
rbd_13.0.0 write 9147822080 4096
rbd_13.0.0 write 4819779584 4096
rbd_13.0.0 write 907501568 4096
rbd_13.0.0 write 3035762688 4096
rbd_13.0.0 write 7090388992 4096
rbd_13.0.0 write 5126242304 4096
rbd_13.0.0 write 6447304704 4096
rbd_13.0.0 write 6967037952 4096
rbd_13.0.0 write 4684316672 4096
rbd_13.0.0 write 4559695872 4096
```
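Since the v2 format is plain text, a load with a data hot spot can be produced by a small script. The following is only a minimal sketch: the function name make_v2_iolog and its parameters (hot_fraction, hot_probability) are hypothetical, and the hot region is simply assumed to sit at the start of the image.

```python
import random


def make_v2_iolog(filename, n_ios, image_size, block_size=4096,
                  hot_fraction=0.1, hot_probability=0.9, seed=0):
    """Return the lines of a v2 iolog whose writes concentrate on a hot region.

    The first `hot_fraction` of the blocks receives roughly
    `hot_probability` of the writes; the rest are spread uniformly
    over the remaining blocks.
    """
    rng = random.Random(seed)
    total_blocks = image_size // block_size
    if total_blocks < 2:
        raise ValueError("image must hold at least two blocks")
    # Keep at least one hot block and at least one cold block.
    hot_blocks = min(max(1, int(total_blocks * hot_fraction)), total_blocks - 1)

    # Header and file declarations, in the same order as the sample above.
    lines = ["fio version 2 iolog", f"{filename} add", f"{filename} open"]
    for _ in range(n_ios):
        if rng.random() < hot_probability:
            block = rng.randrange(hot_blocks)
        else:
            block = rng.randrange(hot_blocks, total_blocks)
        lines.append(f"{filename} write {block * block_size} {block_size}")
    return lines
```

Writing "\n".join(make_v2_iolog("rbd_13.0.0", 1000, 10 * 2**30)) to a file gives something that can be passed to --read_iolog in the same way as the sample.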

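The v3 format adds a timestamp as the first column on top of v2. This makes it possible to hit a specified IOPS in each second and replay precisely.

```text
100 rbd_13.0.0 write 6447304704 4096
```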

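The line above means that a 4K write is issued 100 microseconds after the fio process starts (the timestamp is relative to the start of the run).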
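The timestamp column is what allows a different IOPS level in each second. The sketch below spreads a per-second IOPS schedule evenly over time. It rests on several assumptions: timestamps are taken to be in microseconds, the header line is fio version 3 iolog, and file-management lines (add, open) also carry a timestamp. The function name make_v3_iolog is hypothetical.

```python
import random


def make_v3_iolog(filename, iops_per_second, image_size,
                  block_size=4096, seed=0):
    """Return v3 iolog lines for a per-second IOPS schedule.

    iops_per_second[i] random writes are spread evenly over second i.
    Timestamps are written in microseconds (an assumption of this sketch).
    """
    rng = random.Random(seed)
    total_blocks = image_size // block_size
    # In v3 every line starts with a timestamp, including add/open.
    lines = ["fio version 3 iolog", f"0 {filename} add", f"0 {filename} open"]
    for second, iops in enumerate(iops_per_second):
        for i in range(iops):
            # Evenly spaced inside the current second.
            ts = second * 1_000_000 + i * 1_000_000 // iops
            offset = rng.randrange(total_blocks) * block_size
            lines.append(f"{ts} {filename} write {offset} {block_size}")
    return lines
```

For example, make_v3_iolog("rbd_13.0.0", [100, 0, 400], 2**30) describes 100 writes in the first second, an idle second, and then 400 writes in the third second.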

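How does fio tell the two iolog formats apart?

fio inspects the magic in the file header to decide whether the file is in blktrace format or in iolog format.

The code is fairly straightforward: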

```c
/* iolog.c */
bool init_iolog(struct thread_data *td)
{
	bool ret;

	if (td->o.read_iolog_file) {
		int need_swap;
		char *fname = get_name_by_idx(td->o.read_iolog_file, td->subjob_number);

		if (is_blktrace(fname, &need_swap)) {
			td->io_log_blktrace = 1;
			ret = init_blktrace_read(td, fname, need_swap);
		} else {
			td->io_log_blktrace = 0;
			ret = init_iolog_read(td, fname);
		}
		free(fname);
		...
	}
	...

/* blktrace.c */
bool is_blktrace(const char *filename, int *need_swap)
{
	struct blk_io_trace t;
	int fd, ret;

	fd = open(filename, O_RDONLY);
	if (fd < 0)
		return false;

	ret = read(fd, &t, sizeof(t));
	close(fd);

	if (ret < 0) {
		perror("read blktrace");
		return false;
	} else if (ret != sizeof(t)) {
		log_err("fio: short read on blktrace file\n");
		return false;
	}

	if ((t.magic & 0xffffff00) == BLK_IO_TRACE_MAGIC) {
		*need_swap = 0;
		return true;
	}

	t.magic = fio_swap32(t.magic);
	if ((t.magic & 0xffffff00) == BLK_IO_TRACE_MAGIC) {
		*need_swap = 1;
		return true;
	}

	return false;
}
```
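The same check can be reproduced outside fio to see which branch a given file would take. This is a simplified sketch, not fio's implementation: it looks only at the first four bytes instead of reading a whole struct blk_io_trace, it tries both byte orders rather than swapping, and the text-header check for v2 and v3 is added for convenience. The function name detect_replay_format is hypothetical; BLK_IO_TRACE_MAGIC is taken to be 0x65617400, as in the kernel's blktrace header.

```python
import struct

BLK_IO_TRACE_MAGIC = 0x65617400  # upper three bytes are the magic, low byte is the version


def detect_replay_format(path):
    """Classify a replay file as 'blktrace', 'iolog v2', 'iolog v3' or 'unknown'."""
    with open(path, "rb") as f:
        head = f.read(64)

    if len(head) >= 4:
        # Try little-endian and big-endian, which mirrors the
        # "check, then byte-swap and check again" logic in is_blktrace().
        for endian in ("<", ">"):
            (magic,) = struct.unpack(endian + "I", head[:4])
            if (magic & 0xFFFFFF00) == BLK_IO_TRACE_MAGIC:
                return "blktrace"

    # Otherwise look for the plain-text iolog header on the first line.
    first_line = head.split(b"\n", 1)[0].strip()
    for version in (b"2", b"3"):
        if first_line == b"fio version " + version + b" iolog":
            return "iolog v" + version.decode()
    return "unknown"
```

Calling detect_replay_format("dd.bin") on the file captured earlier should report blktrace, while a file produced by write_iolog should report one of the iolog versions.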

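Publicly available iologs:

Storage benchmark specifications (such as SNIA) publish trace sets for their test models.

SNIA - Storage Networking Industry Association: IOTTA Repository Home

The corresponding iolog.bin replay files can be downloaded from there.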

How the iolog relates to Ceph RBD

RBD interfaces behind the iolog actions

Which rbd interface does each action map to?

add

open

close

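These have no real effect for rbd; they only set a flag.

When the engine is shut down, fio_rbd_cleanup is triggered, which then calls the underlying shutdown.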

action

wait

read

write

sync

datasync

trim

```c
static int ipo_special(struct thread_data *td, struct io_piece *ipo)
{
	struct fio_file *f;
	int ret;

	if (ipo->ddir != DDIR_INVAL)
		return 0;

	f = td->files[ipo->fileno];

	if (ipo->delay)
		iolog_delay(td, ipo->delay);
	if (fio_fill_issue_time(td))
		fio_gettime(&td->last_issue, NULL);

	switch (ipo->file_action) {
	case FIO_LOG_OPEN_FILE:
		if (td->o.replay_redirect && fio_file_open(f)) {
			dprint(FD_FILE, "iolog: ignoring re-open of file %s\n",
					f->file_name);
			break;
		}
		ret = td_io_open_file(td, f);
		if (!ret)
			break;
		td_verror(td, ret, "iolog open file");
		return -1;
	case FIO_LOG_CLOSE_FILE:
		td_io_close_file(td, f);
		break;
	case FIO_LOG_UNLINK_FILE:
		td_io_unlink_file(td, f);
		break;
	case FIO_LOG_ADD_FILE:
		break;
	default:
		log_err("fio: bad file action %d\n", ipo->file_action);
		break;
	}

	return 1;
}
```

```c
static enum fio_q_status fio_rbd_queue(struct thread_data *td,
				       struct io_u *io_u)
{
	struct rbd_data *rbd = td->io_ops_data;
	struct fio_rbd_iou *fri = io_u->engine_data;
	int r = -1;

	fio_ro_check(td, io_u);

	fri->io_seen = 0;
	fri->io_complete = 0;

	r = rbd_aio_create_completion(fri, _fio_rbd_finish_aiocb,
				      &fri->completion);
	if (r < 0) {
		log_err("rbd_aio_create_completion failed.\n");
		goto failed;
	}

	if (io_u->ddir == DDIR_WRITE) {
		r = rbd_aio_write(rbd->image, io_u->offset, io_u->xfer_buflen,
				  io_u->xfer_buf, fri->completion);
		if (r < 0) {
			log_err("rbd_aio_write failed.\n");
			goto failed_comp;
		}
	} else if (io_u->ddir == DDIR_READ) {
		r = rbd_aio_read(rbd->image, io_u->offset, io_u->xfer_buflen,
				 io_u->xfer_buf, fri->completion);
		if (r < 0) {
			log_err("rbd_aio_read failed.\n");
			goto failed_comp;
		}
	} else if (io_u->ddir == DDIR_TRIM) {
		r = rbd_aio_discard(rbd->image, io_u->offset,
				    io_u->xfer_buflen, fri->completion);
		if (r < 0) {
			log_err("rbd_aio_discard failed.\n");
			goto failed_comp;
		}
	} else if (io_u->ddir == DDIR_SYNC) {
		r = rbd_aio_flush(rbd->image, fri->completion);
		if (r < 0) {
			log_err("rbd_flush failed.\n");
			goto failed_comp;
		}
	} else {
		dprint(FD_IO, "%s: Warning: unhandled ddir: %d\n", __func__,
		       io_u->ddir);
		r = -EINVAL;
		goto failed_comp;
	}

	return FIO_Q_QUEUED;
failed_comp:
	rbd_aio_release(fri->completion);
failed:
	io_u->error = -r;
	td_verror(td, io_u->error, "xfer");
	return FIO_Q_COMPLETED;
}
```
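Reading the branches of fio_rbd_queue as a table gives a quick way to estimate which librbd calls a given iolog would trigger. The sketch below is derived only from the excerpt above: wait and datasync have no branch of their own there, so they are left out, and the file actions (add, open, close) are ignored. The names RBD_CALL_BY_ACTION and summarize_rbd_calls are hypothetical.

```python
from collections import Counter

# iolog action -> librbd call, as read from the fio_rbd_queue() excerpt.
RBD_CALL_BY_ACTION = {
    "read": "rbd_aio_read",
    "write": "rbd_aio_write",
    "trim": "rbd_aio_discard",
    "sync": "rbd_aio_flush",
}


def summarize_rbd_calls(iolog_lines):
    """Count the librbd calls that the I/O lines of a v2 iolog map to."""
    calls = Counter()
    for line in iolog_lines:
        fields = line.split()
        # I/O lines have four fields: filename action offset length.
        # The header and add/open/close lines fall through unchanged.
        if len(fields) == 4 and fields[1] in RBD_CALL_BY_ACTION:
            calls[RBD_CALL_BY_ACTION[fields[1]]] += 1
    return calls
```

Applied to the v2 sample shown earlier, every counted call would be rbd_aio_write, since the sample contains only write actions.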

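When the image is accessed through the librbd userspace interface, how can I/O be captured and replayed?

1. Map the image with rbd map and capture on the mapped device.
2. Capture in userspace with LTTng.

For option 2 the community provides an example.

### LTTng capture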

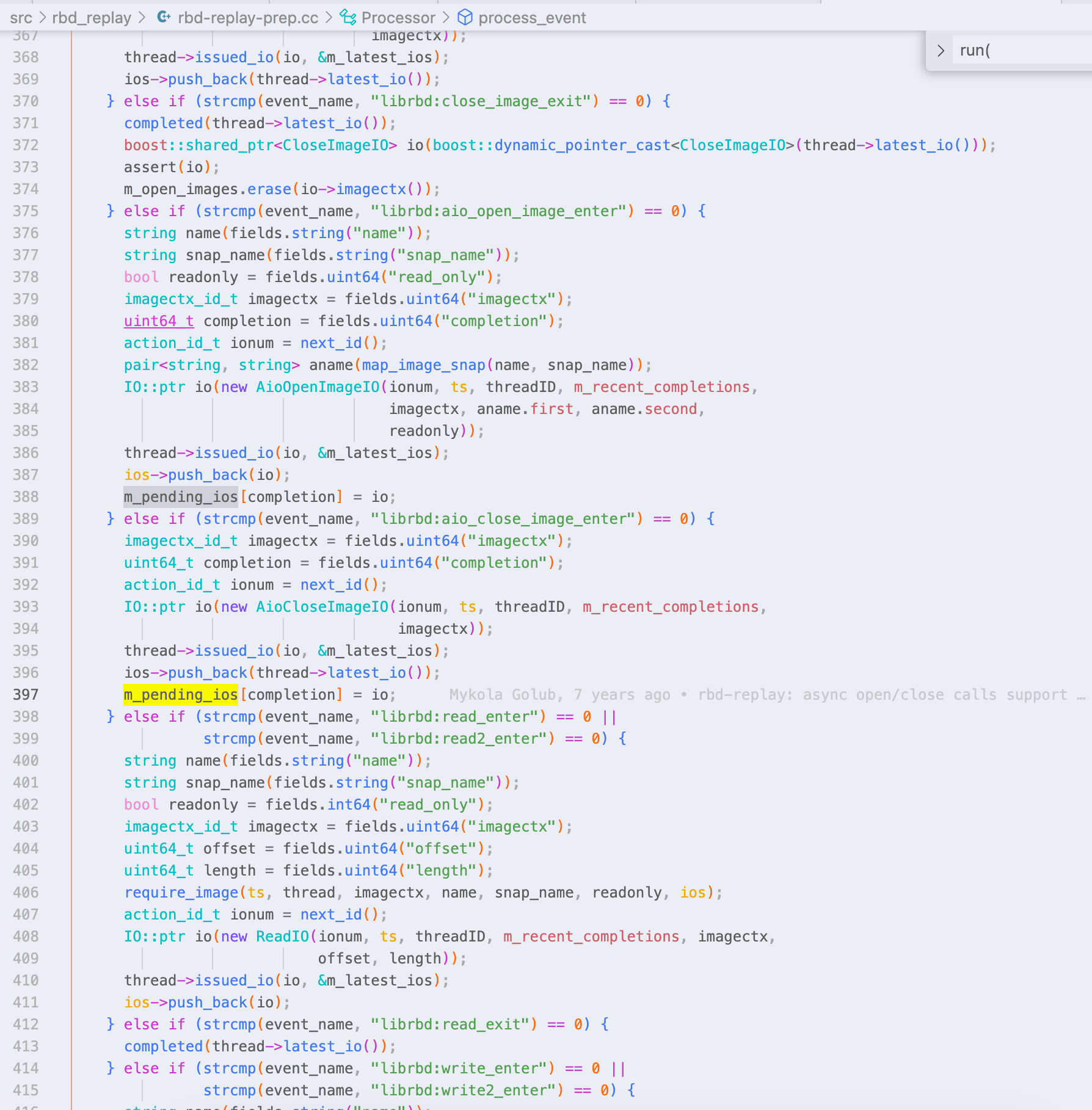

RBD Replay

— Ceph Documentation

Capture the trace. Make sure to capture pthread_id context:

Enable the following debug switches:

```text
rbd_tracing
osd_tracing
rados_tracing
```

```bash
lttng-sessiond --daemonize

mkdir -p traces
lttng create -o traces librbd
lttng enable-event -u 'librbd:*'
lttng add-context -u -t pthread_id
lttng start
# run the RBD workload here
lttng stop

rbd-replay-prep traces/ust/uid/*/* replay.bin

rbd-replay --read-only replay.bin
```

The rbd-replay code is essentially a standalone parser for the tracepoint format captured by LTTng.

FAQ

TODO: Why do the first eight hex digits of the bin file I got from blkparse read 7407 6561, rather than the 0xffffff00 in the code?
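One likely explanation, sketched under assumptions: 0xffffff00 in is_blktrace is a mask, not the magic itself, and the value being compared is BLK_IO_TRACE_MAGIC (0x65617400 in the kernel's blktrace header). If the dump was produced by a tool that prints 16-bit little-endian words (the default of hexdump on x86), then 7407 6561 corresponds to the on-disk bytes 07 74 61 65, which read as the 32-bit value 0x65617407: the magic with version byte 0x07. The helper name decode_dump_words is hypothetical.

```python
import struct

BLK_IO_TRACE_MAGIC = 0x65617400


def decode_dump_words(word0, word1):
    """Turn two 16-bit little-endian dump words into (magic, version)."""
    # Rebuild the four on-disk bytes from the dumped words ...
    raw = struct.pack("<2H", word0, word1)
    # ... and read them back as one little-endian 32-bit integer.
    (value,) = struct.unpack("<I", raw)
    # Same split as in is_blktrace(): upper 24 bits magic, low byte version.
    return value & 0xFFFFFF00, value & 0xFF


magic, version = decode_dump_words(0x7407, 0x6561)
print(hex(magic), hex(version), magic == BLK_IO_TRACE_MAGIC)
```

Under these assumptions the file header is exactly what the fio check expects, so the dump and the code do not contradict each other.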

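fio does not support replaying multi-threaded I/O directly. The traces have to be merged with merge_blktrace_file first and then processed.

From initial experiments, when capturing or generating I/O for several jobs, it is best to give each job its own write_iolog file. Otherwise concurrent appends can collide and corrupt the format within a line, which leads to a parse error at replay time.

Once the separate iolog files are available, merge the per-job files with fio --read_iolog="<file1>:<file2>" --merge_blktrace_file="<output_file>".

After that, a single iolog file can be used with the read_iolog option to test multiple jobs.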

Last updated: 2023-09-06 15:14:55