Background and initial diagnosis

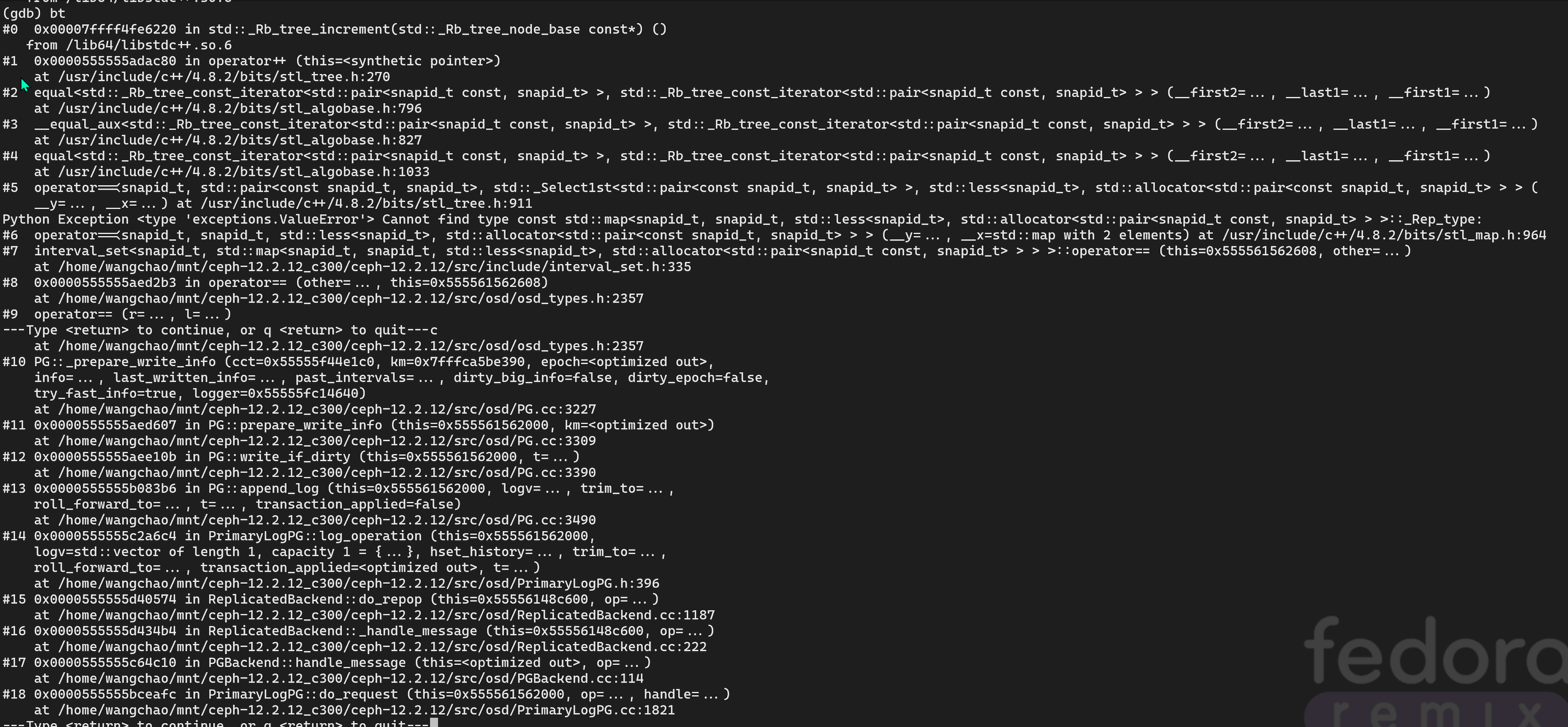

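Background: perf sampling of ceph-osd CPU usage showed an unusually high share in the following call path.

    --10.64%--PG::_prepare_write_info

The debuginfo matching the on-site build is no longer available, so the call chain has to be inferred for now.

The interval_set operator== maps onto the operator== of the underlying map.

_Rb_tree_increment is most likely being called by the map iterator.

    bool operator==(const interval_set& other) const {

    _Self&

Judging from the map internals above, this is almost certainly the culprit: the cost is the map comparison.

The STL implementation below confirms that operator== is what drives the heavy iteration.

    // iterator base class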
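To make the cost concrete, here is a minimal sketch of why comparing two map-backed interval sets is linear in the number of intervals. `SimpleIntervalSet`, `equal_counting` and `make_fragmented` are hypothetical names invented for this note, not Ceph code. The sketch assumes the comparison checks sizes first and then walks both maps in step, which is how libstdc++ implements `std::map::operator==`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>

// Hypothetical stand-in for Ceph's interval_set: start -> length.
struct SimpleIntervalSet {
  std::map<uint64_t, uint64_t> m;

  void insert(uint64_t start, uint64_t len) { m[start] = len; }
};

// Element-wise comparison that also reports how many nodes were visited.
// Every ++ on a map iterator is one red-black tree increment.
inline bool equal_counting(const SimpleIntervalSet& a,
                           const SimpleIntervalSet& b,
                           std::size_t& visited) {
  visited = 0;
  if (a.m.size() != b.m.size()) return false;  // cheap early exit
  auto ia = a.m.begin();
  auto ib = b.m.begin();
  for (; ia != a.m.end(); ++ia, ++ib) {
    ++visited;
    if (*ia != *ib) return false;
  }
  return true;
}

// Build a fragmented set: n single-snap intervals separated by gaps,
// so that nothing can be merged into a longer interval.
inline SimpleIntervalSet make_fragmented(std::size_t n) {
  SimpleIntervalSet s;
  for (std::size_t i = 0; i < n; ++i) s.insert(3 * i, 1);
  return s;
}
```

When the two sets are equal, which is the common case for an unchanged set, every node is visited, so the cost of each comparison grows with the fragmentation of the set.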

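With debuginfo included

After narrowing it down to purged_snaps

The official Ceph documentation on snapshot removal is quoted below.

Given that description, a large purged_snaps is normal for this workload.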

SNAP REMOVAL

To remove a snapshot, a request is made to the Monitor cluster to add the snapshot id to the list of purged snaps (or to remove it from the set of pool snaps in the case of pool snaps). In either case, the PG adds the snap to its snap_trimq for trimming.

A clone can be removed when all of its snaps have been removed. In order to determine which clones might need to be removed upon snap removal, we maintain a mapping from snap to hobject_t using the SnapMapper.

See PrimaryLogPG::SnapTrimmer, SnapMapper

This trimming is performed asynchronously by the snap_trim_wq while the pg is clean and not scrubbing.

The next snap in PG::snap_trimq is selected for trimming

We determine the next object for trimming out of PG::snap_mapper. For each object, we create a log entry and repop updating the object info and the snap set (including adjusting the overlaps). If the object is a clone which no longer belongs to any live snapshots, it is removed here. (See PrimaryLogPG::trim_object() when new_snaps is empty.)

We also locally update our SnapMapper instance with the object’s new snaps.

The log entry containing the modification of the object also contains the new set of snaps, which the replica uses to update its own SnapMapper instance.

The primary shares the info with the replica, which persists the new set of purged_snaps along with the rest of the info.

RECOVERY

Because the trim operations are implemented using repops and log entries, normal pg peering and recovery maintain the snap trimmer operations with the caveat that push and removal operations need to update the local SnapMapper instance. If the purged_snaps update is lost, we merely retrim a now empty snap.

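Summary of the quoted text:

Snapshot removal flow: to delete a snapshot, a request goes to the Monitor cluster, which adds the snapshot id to the purged-snaps list (for pool snapshots, the id is removed from the pool's snap set instead). Either way, the PG puts the snap on its snap_trimq to await trimming.

Clone removal condition: a clone can be removed only after all of its snaps are gone. The SnapMapper keeps a mapping from snap to hobject_t so that the clones affected by a snap removal can be found.

Asynchronous trimming: snap_trim_wq trims asynchronously while the PG is clean and not scrubbing, taking the next snap from PG::snap_trimq.

Object trimming: for each object, a log entry and a repop update the object info and the snap set (including the overlaps). A clone that no longer belongs to any live snapshot is removed at this point.

Mapping update: the local SnapMapper is updated with the object's new snaps. The log entry carrying the change also records the new snap set, which replicas use to update their own SnapMapper.

Data synchronization: the primary shares the info with the replicas, which persist the new purged_snaps together with the rest of the info.

Recovery: because trimming is built on repops and log entries, normal PG peering and recovery carry it along, provided that push and removal operations update the local SnapMapper. If a purged_snaps update is lost, the only cost is retrimming a snap that is already empty.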

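ceph pg 2.892 query currently shows 2412 purged_snaps entries for that PG. That is a large number, so the heavy iteration is plausible.

~~The remaining question is why some OSDs in the same situation show low CPU usage.~~ This question can be ignored: it later turned out that CPU models differ between nodes, and the nodes whose OSDs use less CPU do have faster CPUs.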

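What triggers the _prepare_write_info call trace?

Mainly the normal I/O path through the PG log.

Later releases fixed this design problem

    removed_snaps_queue [8f1~1,8f4~1]

On a version 16 environment this seems to have been changed to removed_snaps_queue,

and purged_snaps no longer shows up in pg query.

At first glance, pg_info still compares purged_snaps,

but the set is empty most of the time, so the problem seen on this version no longer exists there.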
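For reading dumps such as `[8f1~1,8f4~1]`, a small parser sketch follows. It assumes the notation is `start~length` with both fields printed in hexadecimal, which is my reading of how these snap interval sets are printed; `parse_snap_intervals` is a hypothetical helper, not a Ceph function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

// Parse "[8f1~1,8f4~1]" into (start, length) pairs.
// Assumption: both fields are hexadecimal.
inline std::vector<std::pair<uint64_t, uint64_t>>
parse_snap_intervals(const std::string& text) {
  std::vector<std::pair<uint64_t, uint64_t>> out;
  std::size_t pos = 0;
  if (!text.empty() && text[pos] == '[') ++pos;
  while (pos < text.size() && text[pos] != ']') {
    std::size_t used = 0;
    const uint64_t start = std::stoull(text.substr(pos), &used, 16);
    pos += used;
    if (pos >= text.size() || text[pos] != '~') break;  // malformed input
    ++pos;
    const uint64_t len = std::stoull(text.substr(pos), &used, 16);
    pos += used;
    out.emplace_back(start, len);
    if (pos < text.size() && text[pos] == ',') ++pos;
  }
  return out;
}
```

Under that assumption, the example above reads as two single-snap intervals, one starting at snap id 0x8f1 and one at 0x8f4.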

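According to the PR "mon,osd,osdc: refactor snap trimming (phase 1)" by liewegas (Pull Request #18276, ceph/ceph), the design was once discussed on the Ceph Etherpad; see its timeline view (note: only revision 4697 is readable, later revisions from 2020 were overwritten with machine translation).

In 2019, 73 commits landed on the version 15 branch: "osd,mon: remove pg_pool_t::removed_snaps" by liewegas (Pull Request #28330, ceph/ceph, github.com).

Looking at phases 2 and 3 (remove SnapSet::snaps):

it seems this was already being prepared for removal in version 17?

Goals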

- snaps are per-pool, so deletion should be announced via the OSDMap

- a successful purge is the intersection of the purged_snaps of all PGs in the pool

- once a pool has purged a snap, it can be removed from removed_snaps AND purged_snaps

- this should also be announced via the OSDMap

- mon and osd need the full removed set, but it can be global (and mostly read-only) once each pool has purged?
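The second goal (a snap counts as purged for the pool only when every PG has purged it) can be sketched as a plain set intersection. This is a simplification that uses `std::set` of snap ids instead of an interval set, and `pool_purged` is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <iterator>
#include <set>
#include <vector>

using SnapSet = std::set<uint64_t>;

// A snap is purged pool-wide only if it appears in every PG's purged set.
inline SnapSet pool_purged(const std::vector<SnapSet>& per_pg_purged) {
  if (per_pg_purged.empty()) return {};
  SnapSet acc = per_pg_purged.front();
  for (std::size_t i = 1; i < per_pg_purged.size(); ++i) {
    SnapSet next;
    std::set_intersection(acc.begin(), acc.end(),
                          per_pg_purged[i].begin(), per_pg_purged[i].end(),
                          std::inserter(next, next.begin()));
    acc.swap(next);
  }
  return acc;
}
```

One slow PG therefore holds a snap in the removed set for the whole pool, which is why the purged state has to be reported per PG.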

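Summary of the main differences:

| Aspect | plan D | plan C | plan B | plan A |
|---|---|---|---|---|
| osdmap | Tracks only the snaps currently being deleted and the deleted snaps still being purged | pg_pool_t gains recent_removed_snaps_lb_epoch and keeps the list of snaps deleted after that epoch | pg_pool_t gains removed_snaps_lb_epoch, the epoch of the earliest deletion; new_purged_snaps tracks what was purged after that epoch | Tracks only deleted_snaps |
| Requests | - | The request snapc additionally carries removed_snaps and purged_snaps | The request snapc additionally carries removed_snaps and purged_snaps | Keeps a sequence number; old_purged_snaps is consulted only when snap info below that number exists, otherwise it is ignored |
| pginfo | pginfo tracks removed_snaps and purged_snaps | - | - | |
| mon | Tracks the epoch of each deletion | Tracks the epoch of each deletion and updates the osdmap's recent_removed_snaps according to a configured window size | Tracks the epoch of each deletion; a configurable period decides when the earliest deleted snaps are coalesced, which advances removed_snaps_lb_epoch | Every period (for example every 100 osdmaps) updates the purged_snaps interval_set |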

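Reading through phase 1

    Before this PR the version 13 logic had already been added, and the version 12 behaviour was retained

TODO: what is the pre-15 compatibility here meant to serve?

osd/PG: use new mimic osdmap structures for removed, pruned snaps · ceph/ceph@6e1b7c4

Before the PR proper, did this change adjust the format of the osdmap and related structures?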

FAQ walkthrough

High-level overview

Upgrade handling

- OSDMonitor.cc: processes OSDMap::Incremental

- new_removed_snaps

- Handles the snap information in the mon database (OSD_SNAP_PREFIX)

- new_removed_snaps

osdmap updates

removed_snaps archival

Into removed_snaps_queue

Maintenance of the new newly_removed_snaps and newly_purged_snaps

PGPool::update(CephContext *cct, OSDMapRef map)

- new_removed_snaps

PG::activate

- Version 13 builds snap_trimq from removed_snaps_queue; version 12 keeps using cached_removed_snaps

- Version 13 also cuts purged_snaps in pg_info_t down to the part that still has to be kept; version 12 leaves it unchanged

PG::publish_stats_to_osd()

- From version 13 on, purged_snaps is copied from pg_info_t into pg_stat_t; on version 12 the OSD still does not report it

PG::RecoveryState::Active::react(const AdvMap& advmap)

- Version 13 uses the new logic. In addition, if the PG's previous last_require_osd_release was 12, purged_snaps from pg_info_t is subtracted from snap_trimq. TODO: this is unclear; is it because the source of snap_trimq is reused?

- Version 12 keeps using newly_removed_snaps

PGPool initialization

- Version 12 has to build cached_removed_snaps

Cleanup of removed_snaps in pg_info_t

Maintenance of removed_snaps in pg_stat_t

Does this involve the PG activation flow, and the snapshot-removal state machine?
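The version split in PG::activate described in these notes can be sketched as below. This is a simplified reading, not the real function: `ActivateInputs`, `ActivateResult` and `on_activate` are hypothetical, plain `std::set` replaces the interval set, and the rule that version 13 keeps only the purged snaps still present in the queue is my interpretation of "cuts purged_snaps down to the part that still has to be kept".

```cpp
#include <cassert>
#include <cstdint>
#include <set>

using SnapSet = std::set<uint64_t>;

enum class Release { Luminous = 12, Mimic = 13 };

// Hypothetical, simplified view of the state consulted at activation.
struct ActivateInputs {
  Release require_osd_release;
  SnapSet removed_snaps_queue;   // from the osdmap (version 13 path)
  SnapSet cached_removed_snaps;  // from PGPool (version 12 path)
  SnapSet purged_snaps;          // from pg_info_t
};

struct ActivateResult {
  SnapSet snap_trimq;
  SnapSet purged_snaps;
};

inline ActivateResult on_activate(const ActivateInputs& in) {
  const bool mimic = in.require_osd_release >= Release::Mimic;
  const SnapSet& source =
      mimic ? in.removed_snaps_queue : in.cached_removed_snaps;

  ActivateResult r;
  SnapSet queued_and_purged;
  for (uint64_t s : source) {
    if (in.purged_snaps.count(s) != 0) {
      queued_and_purged.insert(s);  // already trimmed, nothing to do
    } else {
      r.snap_trimq.insert(s);       // still has to be trimmed
    }
  }
  // Assumed rule: version 13 forgets purged snaps that left the queue,
  // version 12 keeps the full history.
  r.purged_snaps = mimic ? queued_and_purged : in.purged_snaps;
  return r;
}
```

The point of the version 13 branch is that purged_snaps stops growing without bound, which is what made the comparison in _prepare_write_info expensive.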

Tying the communication flow together

- pg_pool_t::removed_snaps: used in the user-defined scenario

- unmanaged_snap

- OSDMonitor::prepare_remove_snaps

- OSDMonitor::preprocess_remove_snaps

- pg_pool_t::add_unmanaged_snap

- pg_pool_t::remove_unmanaged_snap

- pg_pool_t::encode

- pg_pool_t::decode

- const mempool::osdmap::map<int64_t,pg_pool_t>& pools

- In response to the osdmap: creating or deleting a snapshot does cause an osdmap change

- OSDMap::Incremental::propagate_snaps_to_tiers

- Pool-wide snaps (a pool attribute)

- pg_pool_t::build_removed_snaps

- snap existence/non-existence defined by snaps[] and snap_seq

- When removed_snaps has not been generated, pg_pool_t->snaps is walked to find which snapshots currently exist, and the set is built from that

- pg_pool_t::maybe_updated_removed_snaps

- PGPool::update

- pg_pool_t::add_snap

- pg_pool_t::remove_snap

- pg_pool_t::build_removed_snaps

- unmanaged_snap

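- PGPool::cached_removed_snaps // current removed_snaps set

This should be the set that is actually in use.

- PGPool::update

- PG::handle_advance_map

- When the cached_removed_snaps in OSD memory is older than removed_snaps in the osdmap, cached_removed_snaps is replaced by the new removed_snaps, and newly_removed_snaps becomes the difference left after subtract.

- If it is not a subset, cached_removed_snaps is simply replaced.

- PG::activate

- As primary, sets snap_trimq to cached_removed_snaps minus purged_snaps from pg_info_t

- When the PG log needs updating, sends MOSDPGLog, which includes this PG's purged_snaps

- PG::handle_advance_map

- With the debug option osd_debug_verify_cached_snaps enabled, removed_snaps is rebuilt once via build_removed_snaps and compared; processing continues only if the check passes

- PGPool::update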

- PGPool::newly_removed_snaps // newly removed in the last epoch

The most recently removed snaps.

- PG::RecoveryState::Active::react(const AdvMap& advmap)

- pg->snap_trimq.union_of(pg->pool.newly_removed_snaps);

- When the event arrives and newly_removed_snaps is non-empty, it is merged into snap_trimq so that the trimmer can act on it

- PG::RecoveryState::Active::react(const AdvMap& advmap)

- pg_info_t::purged_snaps

- pg_info_t::encode

- pg_info_t::decode

- bool operator==(const pg_info_t& l, const pg_info_t& r)

- PG::activate

- PG::split_into(pg_t child_pgid, PG *child, unsigned split_bits)

- PG::_prepare_write_info

- Judging from the environment, dirty_big_info should be false

- When providing pg_info, purged_snaps is temporarily emptied and restored after encoding: map<string,bufferlist> km;

- PG::read_info

- PG::filter_snapc

- PG::proc_primary_info

- PG::RecoveryState::ReplicaActive::react(const MInfoRec& infoevt): updates purged_snaps in pg_info_t during recovery

- PGLog::merge_log

- PrimaryLogPG::AwaitAsyncWork::react(const DoSnapWork&)

- The snap_to_trim returned by get_next_objects_to_trim is added to purged_snaps in pg_info_t

- snap_to_trim is removed from the PG's snap_trimq

- PGTransaction::ObjectOperation::updated_snaps

- PG::snap_mapper

- PG::clear_object_snap_mapping

- PG::update_object_snap_mapping

- PG::update_snap_map

- PG::_scan_snaps(ScrubMap &smap)

- Triggered during scrub; it seems to only verify snapshot metadata and has no other effect

- TODO: the scrub-side verification logic

- PrimaryLogPG::on_local_recover

- PrimaryLogPG::AwaitAsyncWork::react(const DoSnapWork&)

- snapset

- legacy appears to refer to releases older than version 12

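- First, all daemons are restarted; from then on each process knows its own feature level is 13

- Code that checks the osdmap keeps following the version 12 logic

- Code that checks HAVE_FEATURE would all enter the new logic? Is that a problem?

- Then require_osd_release is raised to 13

- Now the osdmap-gated code also switches to the new logic. OSDs never switch at exactly the same moment, though, so version 13 logic and version 12 logic will talk to each other for a while. How is a crash prevented in that window?

- OSD-mgr communication is another such point.

- pg_stat_t

- PG state machine

- Compatibility of the new gap_removed_snaps in mon-OSD communication.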
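The version 12 bookkeeping for cached_removed_snaps and newly_removed_snaps noted in the outline above can be sketched as follows. `PoolSnapCache` is a hypothetical stand-in for the two PGPool members, and `std::set` replaces the interval set; the subset rule follows the notes (difference when the cache is a subset of the new set, plain replacement otherwise).

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <iterator>
#include <set>

using SnapSet = std::set<uint64_t>;

// Hypothetical stand-in for the two PGPool members discussed in the notes.
struct PoolSnapCache {
  SnapSet cached_removed_snaps;
  SnapSet newly_removed_snaps;

  // map_removed: the removed_snaps set built from the latest osdmap.
  void update(const SnapSet& map_removed) {
    newly_removed_snaps.clear();
    const bool cache_is_subset =
        std::includes(map_removed.begin(), map_removed.end(),
                      cached_removed_snaps.begin(),
                      cached_removed_snaps.end());
    if (cache_is_subset) {
      // The difference is what was removed since the last update.
      std::set_difference(map_removed.begin(), map_removed.end(),
                          cached_removed_snaps.begin(),
                          cached_removed_snaps.end(),
                          std::inserter(newly_removed_snaps,
                                        newly_removed_snaps.begin()));
    }
    // Either way the cache now mirrors the map. When the cache was not a
    // subset, newly_removed_snaps stays empty.
    cached_removed_snaps = map_removed;
  }
};
```

In this model the cached set only ever grows on the normal path, which matches the observation that the version 12 structures accumulate entries over the life of the pool.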

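Test scenarios

After the upgrade, pay particular attention to:

- parsing of the new pg_stat_t fields in mgr-OSD communication
- handling of historical gap_removed_snaps between mon and OSD
- queries and newly written entries
- the move from pg_info_t to pg_stat_t
- encoding and decoding of osdmap/pg_stat_t/pg_info_t/MOSDMap

Stability

1. After upgrading the mgr
   1. Communication with older versions
   2. Restart of all services
   3. Normal workload tests
2. After upgrading mgr/mon
   1. Communication with older versions
   2. Restart of all services
   3. Normal workload performance and functional interface tests
3. After a partial mgr/mon/osd upgrade
   1. Communication with older versions
   2. Restart of all services
   3. Normal workload performance and functional interface tests
4. After a full mgr/mon/osd upgrade
   1. Communication with older versions
   2. Restart of all services
   3. Normal workload performance and functional interface tests
5. Do qa/unittest contain relevant test suites?
   1. test_snap_mapper.cc
      1. snaps
   2. testPGLog.cc
      1. purged_snaps in pg_info_t

What is the effect of the mon persisting these updates to its database, and what is the performance impact?

How does the version 12 design persist this, and where is it used? The same call-site list given earlier in these notes applies.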


SnapTrimmer state machine

snap_mapper

    /**

clones clone_snaps

snapSet

If the head is deleted while there are still clones, a snapdir object is created instead to house the SnapSet.

Why do rbd snapshot creation and deletion take so long?

It looks like the mon is slow to respond?

snapdir?

CEPH_SNAPDIR is reportedly created by a clone that preserves the original snapshot.

How the PG state machine relates to PGPool::update

snaptrim logic

    rbd::Shell::execute()
      --> rbd::action::snap::execute_remove()
      --> rbd::action::snap::do_remove_snap()
      --> librbd::Image::snap_remove2()
      --> librbd::snap_remove()
      --> librbd::Operations<librbd::ImageCtx>::snap_remove()
      --> Operations<I>::snap_remove()
      --> librbd::Operations<librbd::ImageCtx>::execute_snap_remove()
      --> librbd::operation::SnapshotRemoveRequest::send()
      --> cls_client::snapshot_remove()
      --> ...
      --> send the op to the primary OSD that holds the rbd_header object

The key functions: trim_object and get_next_objects_to_trim

Presumably the transaction sets snaps through the update_snaps used in the PG log, and the normal PG logic then merges them from the PG log? But the merge operates directly on purged_snaps, so how would the snaps updated here turn into purged_snaps? Probably that is not the mechanism.

    } else if (r == -ENOENT) {

What conditions put the SnapTrimmer into its initial state?

    snap_trimmer_machine

In the version 13 design, my initial understanding is that this is persisted in the mon database. When an OSD needs the data, does that introduce a new protocol message to the mon?

- pg_pool_t::removed_snaps

- OSDMonitor::encode_pending(MonitorDBStore::TransactionRef t)

- On the first upgrade, removed_snaps is converted to new_removed_snaps and written to the mon database

- OSDMonitor::preprocess_remove_snaps(MonOpRequestRef op)

- OSDMonitor::prepare_remove_snaps(MonOpRequestRef op)

- "tier add --force-nonempty"

- pg_pool_t::is_removed_snap(snapid_t s) const

- pg_pool_t::build_removed_snaps(interval_set<snapid_t>& rs) const

- pg_pool_t::maybe_updated_removed_snaps(const interval_set<snapid_t>& cached) const

- pg_pool_t::add_unmanaged_snap(uint64_t& snapid)

- pg_pool_t::remove_unmanaged_snap(snapid_t s)

- pg_pool_t::encode(bufferlist& bl, uint64_t features) const

- pg_pool_t::decode(bufferlist::iterator& bl)

- OSDMap::Incremental::propagate_snaps_to_tiers(CephContext *cct,

- PGPool::update(CephContext *cct, OSDMapRef map)

- Checks the osdmap version: version 12 keeps the old logic; version 13 clears the version 12 newly_removed_snaps and cached_removed_snaps

- PG::activate

- osdmap at version 12: snap_trimq keeps using cached_removed_snaps

- Version 13: snap_trimq uses removed_snaps_queue

- OSDMonitor::encode_pending(MonitorDBStore::TransactionRef t)

- pg_pool_t::new_removed_snaps

- OSDMonitor::encode_pending(MonitorDBStore::TransactionRef t)

- OSDMonitor::prepare_remove_snaps(MonOpRequestRef op)

- OSDMonitor::prepare_pool_op(MonOpRequestRef op)

- OSDMap::Incremental::propagate_snaps_to_tiers(CephContext *cct

- OSDMap::Incremental::encode(bufferlist& bl, uint64_t features) const

- OSDMap::Incremental::decode(bufferlist::iterator& bl)

- OSDMap::apply_incremental(const Incremental &inc)

- OSDMap::encode(bufferlist& bl, uint64_t features) const

- OSDMap::decode(bufferlist::iterator& bl)

- const mempool::osdmap::map<int64_t,snap_interval_set_t>&

- PG::RecoveryState::Active::react(const AdvMap& advmap)

- Objecter::_prune_snapc

- get_new_removed_snaps()

- pg_pool_t::new_purged_snaps

- OSDMonitor::encode_pending(MonitorDBStore::TransactionRef t)

- OSDMonitor::try_prune_purged_snaps()

- OSDMap::Incremental::encode(bufferlist& bl, uint64_t features) const

- OSDMap::Incremental::decode(bufferlist::iterator& bl)

- OSDMap::apply_incremental(const Incremental &inc)

- OSDMap::encode(bufferlist& bl, uint64_t features) const

- OSDMap::decode(bufferlist::iterator& bl)

- get_new_purged_snaps()

- PG::RecoveryState::Active::react(const AdvMap& advmap)

- removed_snaps_queue

- OSDMonitor::encode_pending(MonitorDBStore::TransactionRef t)

- OSDMap::apply_incremental(const Incremental &inc)

- OSDMap::encode(bufferlist& bl, uint64_t features) const

- OSDMap::decode(bufferlist::iterator& bl)

- get_removed_snaps_queue()

- PG::activate

- osdmap version greater than 13: compatible

- PGMapDigest::purged_snaps

- OSDMonitor::try_prune_purged_snaps()

- PGMapDigest::encode(bufferlist& bl, uint64_t features) const

- PGMapDigest::decode(bufferlist::iterator& p)

- PGMap::calc_purged_snaps()

- pg_stat_t::purged_snaps

- pg_stat_t::encode(bufferlist &bl) const

- pg_stat_t::decode(bufferlist::iterator &bl)

- operator==(const pg_stat_t& l, const pg_stat_t& r)

- pg_info_t::purged_snaps

- pg_info_t::encode(bufferlist &bl) const

- pg_info_t::decode(bufferlist::iterator &bl)

- operator==(const pg_info_t& l, const pg_info_t& r)

- PG::activate

- PG::split_into

- PG::publish_stats_to_osd()

- PG::_prepare_write_info

- PG::filter_snapc(vector<snapid_t> &snaps)

- PG::proc_primary_info(ObjectStore::Transaction &t, const pg_info_t &oinfo)

- PG::RecoveryState::Active::react(const AdvMap& advmap)

- PGLog::merge_log

- PrimaryLogPG::AwaitAsyncWork::react(const DoSnapWork&)

- MOSDMap: mempool::osdmap::map<int64_t,OSDMap::snap_interval_set_t> gap_removed_snaps;

- encode_payload

- decode_payload

- OSDMonitor::send_incremental(

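- During OSD startup, does it need to fetch from the mon the MOSDMap messages for a given range of epochs?

- OSDMonitor::get_removed_snaps_range

- Depends on first_committed and the current osdmap epoch. Does first_committed only change on, say, trim or compact? Otherwise the mon keeps all removed_snaps in memory the whole time? Confirmed, it does.

- Objecter::_scan_requests

- Objecter::handle_osd_map

- Only the Objecter client layer, in clients and the mgr, checks this up front; the OSD does not check it again while doing the work.

- What does the client use it for once found? Mainly pruning, it seems: making sure the snapset of a client request no longer contains that snapshot. To reduce false positives?

- For example, when the snap id the client holds is stale?

- When is the mon told to update this?

- It is driven by osdmap epoch updates, on receiving new_removed_snaps and removed_snaps in the osdmap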
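The client-side pruning discussed above can be sketched as a filter over the snap ids of a request. `prune_snapc` is a hypothetical free function that only illustrates the idea behind Objecter::_prune_snapc; the real member works on per-pool interval sets and on an Op structure.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <set>
#include <vector>

// Drop snap ids that the osdmap reports as removed from a client's
// snap context. Returns true when the list changed.
inline bool prune_snapc(std::vector<uint64_t>& snaps,
                        const std::set<uint64_t>& removed) {
  auto new_end = std::remove_if(
      snaps.begin(), snaps.end(),
      [&removed](uint64_t s) { return removed.count(s) != 0; });
  const bool changed = new_end != snaps.end();
  snaps.erase(new_end, snaps.end());
  return changed;
}
```

Pruning on the client keeps requests from carrying snap ids that the OSD would otherwise have to filter on every operation, and the relative order of the surviving ids is preserved.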

The MDS also has a SnapServer that touches this; its usage there still needs to be understood.

Where do removed_snaps enter the system, and how do they get into the Incremental transaction?

OSDMonitor::prepare_remove_snaps

MRemoveSnaps

    void pg_pool_t::remove_snap(snapid_t s)
    {
      assert(snaps.count(s));
      snaps.erase(s);
      snap_seq = snap_seq + 1;
    }

    case POOL_OP_DELETE_SNAP:
      {
        snapid_t s = pp.snap_exists(m->name.c_str());
        if (s) {
          pp.remove_snap(s);
          pending_inc.new_removed_snaps[m->pool].insert(s);
          changed = true;
        }
      }
      break;

Objecter::delete_pool_snap

What do leftover removed_snaps entries mean?

Where is new_purged_snaps actually acted on?

PrimaryLogPG::kick_snap_trim()

snap_trimq should be the queue that drives the actual work; this part of the state machine has probably barely changed.
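A rough model of how snap_trimq drives the work: take the lowest queued snap, trim a batch of its objects, and once no objects remain (the -ENOENT case) move the snap from snap_trimq to purged_snaps. `TrimState` and `trim_step` are hypothetical; the object map stands in for the SnapMapper, and the real trimmer is an asynchronous state machine rather than a single function.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <set>
#include <string>
#include <vector>

using SnapSet = std::set<uint64_t>;

struct TrimState {
  SnapSet snap_trimq;
  SnapSet purged_snaps;
  // Stand-in for SnapMapper: snap id -> objects still referencing it.
  std::map<uint64_t, std::vector<std::string>> snap_to_objects;
};

// One trimmer step. Returns the objects selected for trimming; an empty
// result means either the queue was empty or a snap was just marked purged.
inline std::vector<std::string> trim_step(TrimState& st,
                                          std::size_t max_objects) {
  std::vector<std::string> to_trim;
  if (st.snap_trimq.empty()) return to_trim;

  const uint64_t snap = *st.snap_trimq.begin();  // lowest queued snap
  auto it = st.snap_to_objects.find(snap);
  if (it == st.snap_to_objects.end() || it->second.empty()) {
    // Nothing left for this snap: record it as purged and dequeue it.
    st.snap_to_objects.erase(snap);
    st.purged_snaps.insert(snap);
    st.snap_trimq.erase(snap);
    return to_trim;
  }

  auto& objs = it->second;
  const auto n =
      static_cast<std::ptrdiff_t>(std::min(max_objects, objs.size()));
  to_trim.assign(objs.begin(), objs.begin() + n);
  objs.erase(objs.begin(), objs.begin() + n);
  return to_trim;
}
```

In this model purged_snaps only grows when a snap is fully trimmed, which is where the per-PG set from the original CPU problem gets its entries.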

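MonCap has a comment that mentions snapshots?

How does the MDS use snapshots?

Merge process

Ignore this, I misread it. * Decoding the osdmap needs feature detection... but there is a difference: there should be another v feature before the snapshot one. My initial understanding is that the mimic feature flag still has to be introduced in order to tell old OSDs apart? Could feature detection be added directly, without introducing mimic?

The SERVER_M flag already exists.

Patch 12 needs adapting: replace SERVER_MIMIC with SERVER_M.

Patch 20 requires checking pg_stat_t: version 12 is at 22, while the patch goes 23->24. The related PR has 2 commits: https://hub.nuaa.cf/ceph/ceph/pull/18058/commits

a25221e

That change, however, was refactored away almost immediately: osd_types.cc: don't store 32 least siognificant bits of state twice · ceph/ceph@0230fe6 · GitHub

Also a PR with 2 commits: osd_types.cc: reorder fields in serialized pg_stat_t by branch-predictor · Pull Request #19965 · ceph/ceph · GitHub

ae7472f 0230fe6

The obstacle seen so far: in version 12, snaptrimq_len still comes after invalid; versions 13-14 also did some reordering, yet they remain compatible.

Version 13 is 033d246; version 12 is ca4413d.

So this is there to preserve compatibility with version 12?

Then how is compatibility guaranteed for a 12-to-13 upgrade?

ceph/osd_types.cc at nautilus · ceph/ceph · GitHub shows that compatibility between 14 and 12 is preserved.

pg_stat_t::decode(bufferlist::iterator &bl)

The osdmap additions have to be merged first:

27d6f43

Around patch 0020, the new recovery_unfound was merged by hand (the #define block in osd_types.h); it is not yet clear why this part gets pulled in.

Then patch 0028 bumps v to 27 in pg_pool_t::encode. Patch 0030: the change to PGMapDigest& get_digest depends on a later refactor that moved some mon functions into the mgr, so the tentative approach is to leave it unchanged and handle it according to the current version 12 code.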

The build succeeds. Actual run:

    2023-04-12 14:43:11.981804 7f44f6d7d700 0 log_channel(cluster) log [DBG] : pgmap v10: 192 pgs: 165 active+clean, 18 peering, 9 unknown; 180GiB data, 415GiB used, 1.20TiB / 1.61TiB avail
    2023-04-12 14:43:12.871023 7f44fdd8b700 -1 failed to decode message of type 87 v1: buffer::malformed_input: void object_stat_collection_t::decode(ceph::buffer::list::iterator&) no longer understand old encoding version 2 < 122
    2023-04-12 14:43:13.866212 7f4500d91700 -1 failed to decode message of type 87 v1: buffer::malformed_input: void object_stat_collection_t::decode(ceph::buffer::list::iterator&) no longer understand old encoding version 2 < 122
    2023-04-12 14:43:13.982740 7f44f6d7d700 4 OSD 0 is up, but has no stats
    2023-04-12 14:43:13.982742 7f44f6d7d700 2 wc osd 3avail: 71955388378
    2023-04-12 14:43:13.982744 7f44f6d7d700 2 wc osd 4avail: 86134315413
    2023-04-12 14:43:13.982744 7f44f6d7d700 2 wc osd 5avail: 61272017967
    2023-04-12 14:43:13.982748 7f44f6d7d700 4 OSD 0 is up, but has no stats
    2023-04-12 14:43:13.982749 7f44f6d7d700 2 wc osd 1avail: 827202140267

The mgr cannot decode the messages sent by the OSDs; the same error shows up on the OSD side as well.
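Decode failures of this kind usually mean that encoder and decoder disagree about the struct layout. The sketch below shows the append-only, version-gated pattern that keeps old and new readers compatible. It is a generic illustration and not Ceph's ENCODE_START/DECODE_START macros: `Stat`, `encode`, `decode` and the one-byte version header are all made up for this note.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical record that gains a field in encoding version 2.
struct Stat {
  uint32_t num_objects = 0;
  uint32_t snaptrimq_len = 0;  // only present from version 2 on
};

using Buf = std::vector<uint8_t>;

inline void put_u32(Buf& b, uint32_t v) {
  for (int i = 0; i < 4; ++i) b.push_back(static_cast<uint8_t>(v >> (8 * i)));
}

inline uint32_t get_u32(const Buf& b, std::size_t& pos) {
  if (pos + 4 > b.size()) throw std::runtime_error("buffer too short");
  uint32_t v = 0;
  for (int i = 0; i < 4; ++i) v |= static_cast<uint32_t>(b[pos + i]) << (8 * i);
  pos += 4;
  return v;
}

// Layout: [version][compat][fields...]. New fields are only ever appended.
inline Buf encode(const Stat& s, uint8_t version) {
  Buf b;
  b.push_back(version);
  b.push_back(1);  // oldest decoder version able to read this payload
  put_u32(b, s.num_objects);
  if (version >= 2) put_u32(b, s.snaptrimq_len);
  return b;
}

// understood: the newest encoding version this decoder knows about.
inline Stat decode(const Buf& b, uint8_t understood) {
  if (b.size() < 2) throw std::runtime_error("missing header");
  const uint8_t version = b[0];
  const uint8_t compat = b[1];
  if (compat > understood) throw std::runtime_error("encoding too new");
  std::size_t pos = 2;
  Stat s;
  s.num_objects = get_u32(b, pos);
  // Read the newer field only if both sides know it; an old decoder
  // simply ignores the trailing bytes.
  if (version >= 2 && understood >= 2) s.snaptrimq_len = get_u32(b, pos);
  return s;
}
```

Inserting or reordering a field in the middle, instead of appending it behind a version check, makes every later field decode as garbage, which is one way to end up with nonsensical version numbers in an error like the one logged above.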

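Found a scrub test script?

Recommended upgrade procedure

The upgrade order starts with managers, monitors, then other daemons.

Each daemon is restarted only after Ceph indicates that the cluster will remain available.

compatv is indeed 2, but it is less than 25; where does that 25 come from? And where does the 6 in header.version come from?

Finally remembered: the mgr was probably not rebuilt, and neither was the mon.

The mgr builds without problems. For the mon, int32_t has to be changed to uint64_t in PGMonitor.cc because the PG data structure changed: mempool::pgmap::unordered_map<uint64_t,int32_t> num_pg_by_state;

After the replacement, it crashed at:

    ceph version 12.2.12 (1436006594665279fe734b4c15d7e08c13ebd777) luminous (stable)

Found the problem: purged_snaps had been merged incorrectly into pg_stat_t. The OSDs in the environment were presumably already polluted by my earlier accidental start, so the upgrade verification items are skipped for now.

Verify the functionality on its own first.

What to check: removed_snaps_queue in the osdmap, and purged_snaps no longer visible in pg_info via pg query.

It looks like none of my new code took effect? The HAVE_FEATURE check has to pass; the features are obtained in OSDMap.cc by uint64_t OSDMap::get_encoding_features() const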

    if (require_osd_release < CEPH_RELEASE_MIMIC) {
      f &= ~CEPH_FEATURE_SERVER_MIMIC;
    }
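A toy model of this gating, to show why the new code paths stay off until require_osd_release is raised. The bit positions and function names below are invented for illustration and do not match Ceph's real feature masks.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical bit positions, not Ceph's real feature masks.
constexpr uint64_t FEATURE_SERVER_LUMINOUS = 1ull << 0;
constexpr uint64_t FEATURE_SERVER_MIMIC = 1ull << 1;

enum Release { RELEASE_LUMINOUS = 12, RELEASE_MIMIC = 13 };

// Features used when encoding the osdmap: strip whatever the current
// require_osd_release does not allow yet.
inline uint64_t encoding_features(uint64_t supported,
                                  Release require_osd_release) {
  uint64_t f = supported;
  if (require_osd_release < RELEASE_MIMIC) f &= ~FEATURE_SERVER_MIMIC;
  return f;
}

inline bool have_feature(uint64_t features, uint64_t bit) {
  return (features & bit) == bit;
}

// Raising require_osd_release is only safe when every up OSD advertises
// the feature, so the check works on the intersection of all feature sets.
inline bool all_up_osds_have(const std::vector<uint64_t>& osd_features,
                             uint64_t bit) {
  uint64_t common = ~0ull;
  for (uint64_t f : osd_features) common &= f;
  return have_feature(common, bit);
}
```

So even a binary that supports the mimic feature keeps encoding in the old format while require_osd_release is below mimic, which matches the symptom that the new code appeared not to take effect.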

In MonCommands.h, require-osd-release needs mimic added to its accepted values. Add the following block so that mimic is accepted:

    if (rel == osdmap.require_osd_release) {
      // idempotent
      err = 0;
      goto reply;
    }
    assert(osdmap.require_osd_release >= CEPH_RELEASE_LUMINOUS);
    if (rel == CEPH_RELEASE_MIMIC) {
      if (!osdmap.get_num_up_osds() && sure != "--yes-i-really-mean-it") {
        ss << "Not advisable to continue since no OSDs are up. Pass "
           << "--yes-i-really-mean-it if you really wish to continue.";
        err = -EPERM;
        goto reply;
      }
      if ((!HAVE_FEATURE(osdmap.get_up_osd_features(), SERVER_MIMIC))
          && sure != "--yes-i-really-mean-it") {
        ss << "not all up OSDs have CEPH_FEATURE_SERVER_MIMIC feature";
        err = -EPERM;
        goto reply;
      }
    } else {
      ss << "not supported for this release yet";
      err = -EPERM;
      goto reply;
    }

CEPH_FEATURES_ALL must include MIMIC as well, otherwise the OSDs are judged to be an older version.

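Merged commit d9cd2d7 (mon feature mimic).

    ceph mon feature set mimic --yes-i-really-mean-it

So far it has had no visible effect.

The ceph features output can be ignored; even on 16/17 it still reports luminous.

SIGNIFICANT_FEATURES appears to be the feature set the osdmap uses by default, and MIMIC had not been added to it.

After startup, purged_snaps is indeed gone from pg_info, and it is not visible in pg_stat_t either: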

    osd_snap / removed_epoch_1_00000f08
    osd_snap / removed_epoch_39_00000f08
    osd_snap / removed_epoch_39_00000f0b
    osd_snap / removed_epoch_39_00000f0e
    osd_snap / removed_epoch_39_00000f11
    osd_snap / removed_epoch_39_00000f14
    osd_snap / removed_epoch_39_00000f17
    osd_snap / removed_epoch_39_00000f1a
    osd_snap / removed_epoch_39_00000f1d
    osd_snap / removed_epoch_39_00000f20
    osd_snap / removed_epoch_39_00000f23
    osd_snap / removed_epoch_39_00000f26
    osd_snap / removed_epoch_39_00000f29
    osd_snap / removed_epoch_39_00000f2c
    osd_snap / removed_epoch_39_00000f2f
    osd_snap / removed_epoch_39_00000f32
    osd_snap / removed_epoch_39_00000f35
    osd_snap / removed_epoch_39_00000f38
    osd_snap / removed_epoch_39_00000f3b
    osd_snap / removed_epoch_39_00000f3e
    osd_snap / removed_epoch_39_00000f41
    osd_snap / removed_epoch_39_00000f44
    osd_snap / removed_epoch_39_00000f47
    osd_snap / removed_epoch_39_00000f4a
    osd_snap / removed_epoch_39_00000f4d
    osd_snap / removed_epoch_39_00000f50
    osd_snap / removed_epoch_39_00000f53
    osd_snap / removed_epoch_39_00000f56
    osd_snap / removed_epoch_39_00000f59
    osd_snap / removed_epoch_39_00000f5c
    osd_snap / removed_epoch_39_00000f5f
    osd_snap / removed_epoch_39_00000f62
    osd_snap / removed_epoch_39_00000f65
    osd_snap / removed_epoch_39_00000f68
    osd_snap / removed_epoch_39_00000f6b
    osd_snap / removed_epoch_39_00000f6e
    osd_snap / removed_epoch_39_00000f71
    osd_snap / removed_epoch_39_00000f74
    osd_snap / removed_epoch_39_00000f77
    osd_snap / removed_epoch_39_00000f7a
    osd_snap / removed_epoch_39_00000f7d
    osd_snap / removed_epoch_39_00000f80
    osd_snap / removed_epoch_39_00000f83
    osd_snap / removed_epoch_39_00000f86
    osd_snap / removed_epoch_39_00000f89
    osd_snap / removed_epoch_39_00000f8c
    osd_snap / removed_epoch_39_00000f8f
    osd_snap / removed_epoch_39_00000f92
    osd_snap / removed_epoch_39_00000f95
    osd_snap / removed_epoch_39_00000f98
    osd_snap / removed_epoch_39_00000f9b

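- removed_snaps appears to be in effect, but purged_snaps in pg_stat_t does not seem to be working

- removed_snaps_queue in the osdmap is indeed working, but the entries in the old removed_snaps do not seem to have been cleaned up.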
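For checking whether old entries get cleaned up, it helps to split the osd_snap keys into their fields. The sketch below parses `removed_epoch_<decimal>_<8 hex digits>`. Treating the first field as the pool id and the second as the osdmap epoch in hex is an assumption on my part (the steadily increasing suffix under a constant 39 suggests it), and `parse_removed_epoch_key` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <string>

// Assumed meaning of the two fields; the notes themselves do not say.
struct RemovedEpochKey {
  unsigned long long pool = 0;  // decimal field
  unsigned long epoch = 0;      // 8-digit hexadecimal field
};

// Parse "removed_epoch_<decimal>_<8 hex digits>". Returns false when the
// key does not match that shape exactly.
inline bool parse_removed_epoch_key(const std::string& key,
                                    RemovedEpochKey& out) {
  unsigned long long a = 0;
  unsigned long b = 0;
  int consumed = 0;
  if (std::sscanf(key.c_str(), "removed_epoch_%llu_%8lx%n",
                  &a, &b, &consumed) != 2) {
    return false;
  }
  if (static_cast<std::size_t>(consumed) != key.size()) return false;
  out.pool = a;
  out.epoch = b;
  return true;
}
```

Grouping the parsed keys by the first field and tracking the lowest second field over time would show directly whether anything is ever pruned.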

TODO: read through phase 1.5